How Does Qwen3 Work?

An in-depth look at Qwen3 — Alibaba Cloud’s open-source large language model that combines dynamic reasoning modes, Mixture-of-Experts design, and scalable deployment for global applications.

The large-model world feels like a daily race for speed and intelligence. Just as the open-source community was settling into the rhythm of “bigger, stronger, faster,” Alibaba Cloud introduced Qwen3, a new-generation open-source LLM that handles everyday Q&A with ease yet can switch to deep reasoning when needed. If you’re wondering how it actually pulls this off, let’s break it down.

Why is Qwen3 Worth Paying Attention To?

Qwen3 marks the latest milestone in the Qwen family, with several standout features:

- Dual thinking modes: Switches between non-thinking mode for quick answers and thinking mode for complex reasoning.

- Mixture-of-Experts (MoE) architecture: Even at massive parameter scales, only a small subset of expert modules activates for each token, which cuts compute per token while keeping quality competitive with much larger dense models.

- Scalability: From a lightweight 0.6B dense model to the 235B sparse flagship (Qwen3-235B-A22B, with roughly 22B parameters active per token), it fits both personal and enterprise needs.

- Extended context: Supports up to 128K tokens on the larger models (the smallest ones top out lower), useful for long documents, codebases, or extended conversations.

- Multilingual training: Built from data in 119 languages, making it a genuinely global model.

Sounds a bit like the Swiss Army knife of LLMs, doesn’t it?

What’s Special About Its Architecture?

Unified Reasoning Framework

Unlike other ecosystems that separate “chat models” and “reasoning models,” Qwen3 keeps both brains in one head:

- Non-thinking mode: Low latency, fast replies for casual or lookup tasks.

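- Thinking mode: Used for proofs, plans, or multi-step logic. The same weights first write out an explicit chain of reasoning inside a `<think>` block and then give the final answer, trading extra tokens and latency for accuracy.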

Developers can even manually control the switch via API parameters, adding flexibility for real-world apps.
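As a rough illustration of that switch: the Qwen3 model cards describe an `enable_thinking` argument on the chat template plus per-turn soft switches, `/think` and `/no_think`, that can be appended to a user message. The sketch below only builds the message payload; `build_messages` is a hypothetical helper written for this article, not part of any Qwen SDK, and nothing is sent to a model.

```python
from typing import Dict, List

# Soft-switch tags as described in the Qwen3 model cards.
THINK_TAG = "/think"
NO_THINK_TAG = "/no_think"


def build_messages(question: str, thinking: bool) -> List[Dict[str, str]]:
    """Hypothetical helper: build a one-turn chat payload with a mode tag."""
    tag = THINK_TAG if thinking else NO_THINK_TAG
    return [{"role": "user", "content": f"{question} {tag}"}]


# A lookup-style question: ask for a direct answer.
quick = build_messages("What is the capital of France?", thinking=False)

# A proof-style question: ask for step-by-step reasoning first.
deep = build_messages("Prove that the square root of 2 is irrational.", thinking=True)

print(quick[0]["content"])
print(deep[0]["content"])
```

In a real app the resulting list would be passed to whatever chat endpoint or chat template you use; the tag simply rides along in the user turn.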

MoE Sparse Design

In Qwen3’s MoE versions, each MoE layer holds 128 experts, but at runtime a router activates only 8 of them for each token:

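- Per-token compute drops sharply compared with a dense model of the same total size, although memory still has to hold every expert.

- On specialist hardware (like Cerebras), vendors have reported speed-ups of tens of times over peers; treat these as vendor figures rather than independent benchmarks.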
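To make the sparse idea concrete, here is a minimal sketch of generic top-k gating in plain Python. It is a toy, not Qwen3’s actual router: the eight experts, the router scores, and the function names are all made up for illustration, and real MoE layers do this on tensors for every token in a batch.

```python
import math
from typing import Callable, List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over router scores."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_logits: Sequence[float], k: int) -> List[Tuple[int, float]]:
    """Pick the k highest-scoring experts and renormalize their weights."""
    probs = softmax(router_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_forward(x: float, experts: Sequence[Callable[[float], float]],
                router_logits: Sequence[float], k: int) -> float:
    """Run only the selected experts and mix their outputs by gate weight."""
    return sum(w * experts[i](x) for i, w in route_top_k(router_logits, k))


# Toy setup: 8 experts, expert i multiplies its input by (i + 1).
experts = [lambda x, s=s: s * x for s in range(1, 9)]
router_logits = [0.1, 2.0, -1.0, 0.5, 3.0, 0.0, -0.5, 1.0]

chosen = route_top_k(router_logits, k=2)
output = moe_forward(1.0, experts, router_logits, k=2)
print(chosen)   # two (expert_index, weight) pairs
print(output)
```

Only two of the eight expert functions are ever called here, which is the whole point: total capacity grows with the number of experts, while the work per token grows with k.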

How Does It Actually “Move”?

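- Mode selection: Each request runs in one of the two modes. Thinking is on by default, and the caller (or an application-level router that judges difficulty) can turn it off.

- Fast vs. deep response:

  - Simple task → Non-thinking mode, near-instant output.

  - Hard task → Thinking mode, step-by-step reasoning before the answer.

- Dynamic switching: In a multi-turn conversation the mode can change from one turn to the next, so a chat that grows complex can move into thinking mode.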

This adaptive computing mechanism is the secret sauce for balancing speed and depth.
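On the receiving end, thinking-mode output typically wraps the reasoning in `<think>` tags ahead of the final answer. A small sketch of how an app might separate the two follows; `split_reasoning` is a hypothetical helper, and the sample string is hand-written rather than real model output.

```python
import re
from typing import Tuple

# Matches the first <think>...</think> block, including newlines inside it.
THINK_BLOCK = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(text: str) -> Tuple[str, str]:
    """Return (reasoning, answer); reasoning is empty if no block is found."""
    match = THINK_BLOCK.search(text)
    if match is None:
        return "", text.strip()
    reasoning = match.group(1).strip()
    # Everything outside the think block is treated as the visible answer.
    answer = (text[:match.start()] + text[match.end():]).strip()
    return reasoning, answer


sample = "<think>\n2 + 2 equals 4.\n</think>\n\nThe answer is 4."
reasoning, answer = split_reasoning(sample)
print("reasoning:", reasoning)
print("answer:", answer)
```

Keeping the reasoning separate lets a UI hide it by default, log it for debugging, or drop it from the conversation history to save context.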

Deployment & Developer Experience

Qwen3’s weights are openly released under Apache 2.0. You’ll find it on GitHub, Hugging Face, or try it instantly on MixHub’s Qwen3 Next page.

More goodies:

- Supports quantization down to 8 or 4 bits, which brings the smaller models within reach of low-power devices.

- Already works smoothly with Ollama, LM Studio, SGLang, and vLLM for local inference.

- For image capabilities, check out Qwen Image Editing and Qwen Image Generation.
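For a feel of what the quantization point above means in practice, here is a back-of-the-envelope estimate of weight storage: parameters times bits per weight, divided by 8 to get bytes. It is a lower bound under simplifying assumptions; it ignores the KV cache, activations, and the scale/zero-point metadata that real quantization formats add.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# Smallest and largest model sizes mentioned in this article.
for name, params in [("0.6B", 0.6e9), ("235B", 235e9)]:
    for bits in (16, 8, 4):
        gb = weight_memory_gb(params, bits)
        print(f"{name} at {bits}-bit: about {gb:.1f} GB of weights")
```

Note that an MoE model still has to store every expert, so the 235B figure applies to memory even though only a fraction of those weights is used for any single token.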

Performance & Benchmarks

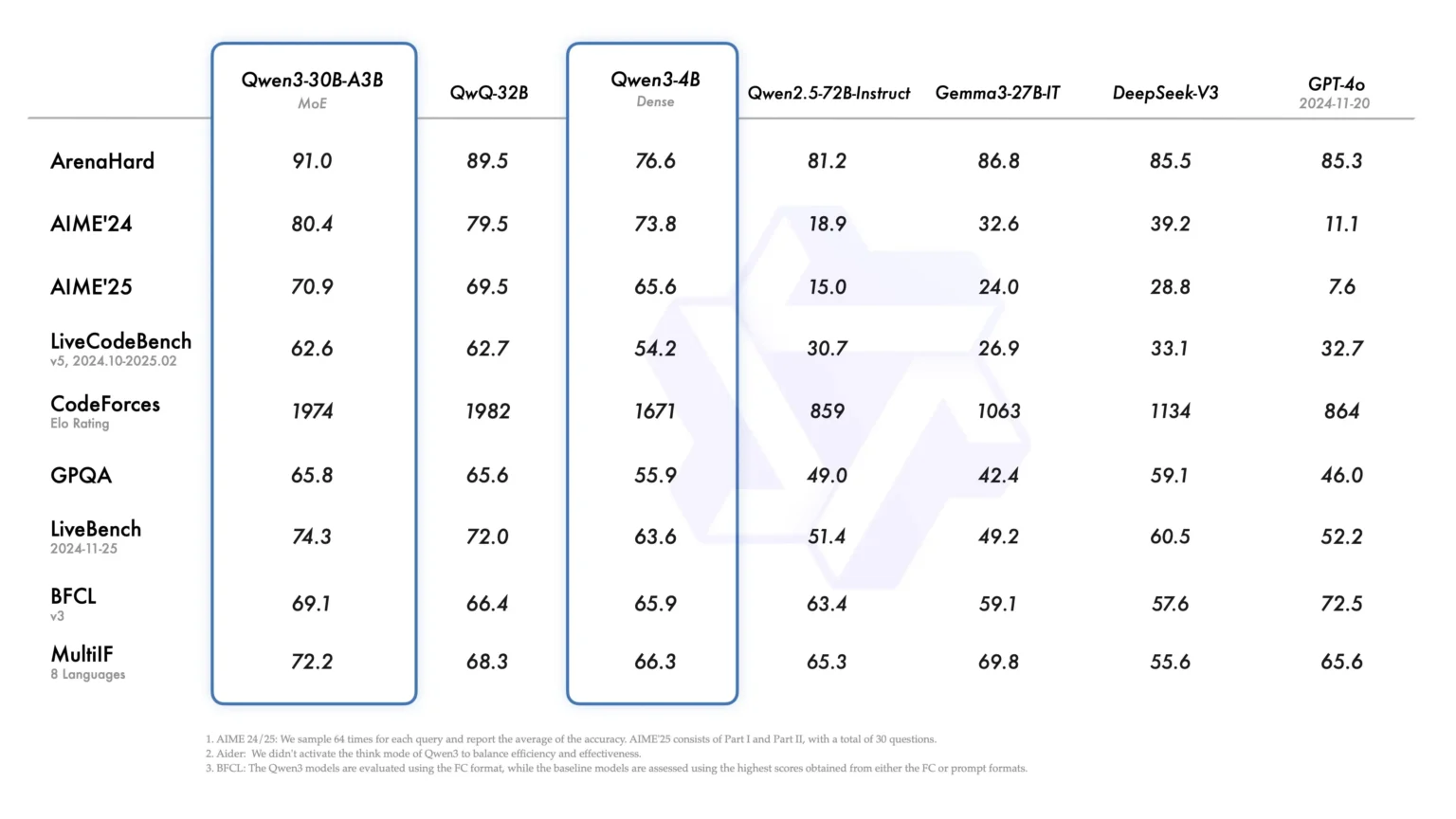

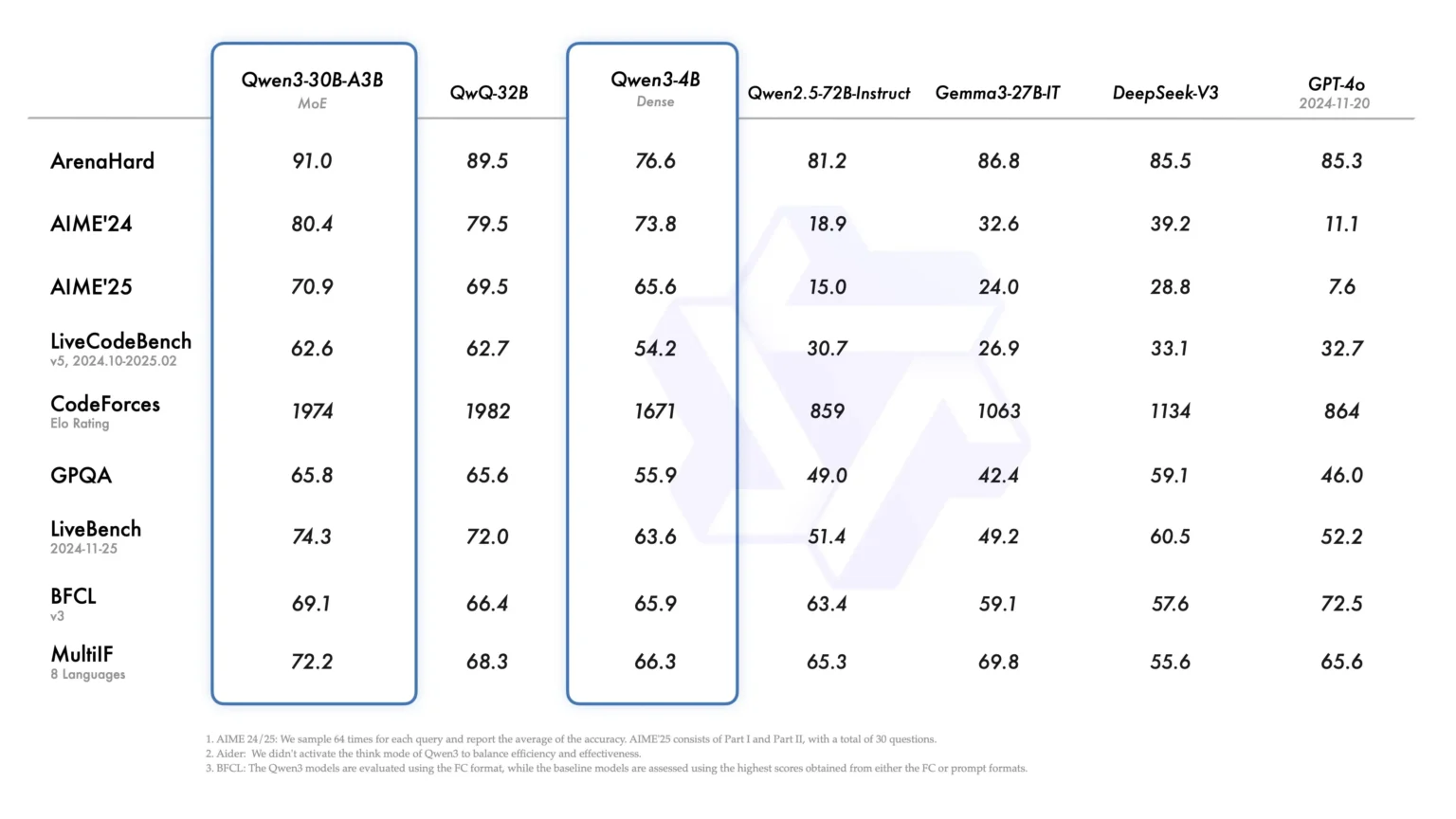

On the LiveBench leaderboard, Qwen3-235B MoE has ranked in the top ten among all models, taking first place in instruction following. Media reviews also note its strong edge in math and code, going toe-to-toe with heavyweights like the closed-source GPT-4 and the open-weight DeepSeek R1.

The Challenges Ahead

Of course, it’s not flawless:

- Hallucinations: Still misfires on factual or ambiguous prompts.

- Extreme quantization: 1–2 bit experiments collapse quality, so the “smartphone brain” dream isn’t here yet.

But those are exactly where the next breakthroughs will likely happen.
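Why does quality fall apart at 1–2 bits? A toy experiment gives some intuition: round synthetic weights to a uniform grid with fewer and fewer levels and watch the average error grow. This is a simple min-max round-to-nearest scheme on random Gaussian values, chosen for illustration; it is not the method used by any particular Qwen3 quantization, and real schemes (per-group scales, calibration) do better.

```python
import random
from typing import List


def quantize(weights: List[float], bits: int) -> List[float]:
    """Min-max uniform quantization to 2**bits levels, then dequantize."""
    levels = 2 ** bits
    lo, hi = min(weights), max(weights)
    step = (hi - lo) / (levels - 1)
    return [lo + round((w - lo) / step) * step for w in weights]


def mean_abs_error(a: List[float], b: List[float]) -> float:
    """Average absolute difference between original and quantized values."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


rng = random.Random(0)  # fixed seed so runs are repeatable
weights = [rng.gauss(0.0, 1.0) for _ in range(10_000)]

errors = {bits: mean_abs_error(weights, quantize(weights, bits))
          for bits in (8, 4, 2, 1)}
for bits, err in errors.items():
    print(f"{bits}-bit: mean abs error {err:.4f}")
```

Each bit removed halves the number of available levels, so the error climbs quickly, and at 1 bit every weight is forced onto one of just two values.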

Conclusion

Qwen3 isn’t just chasing bigger parameter counts. By combining switchable thinking modes with a sparse MoE design, it pairs intelligence with efficiency. And because it’s open-source, it’s more than just an Alibaba project: it’s an open invitation to build the next wave of intelligent systems together.

If you want to try it hands-on, start at Qwen3 Next or download a small model locally. Whether you’re researching, prototyping a startup, or just curious, Qwen3 is worth exploring.